Too Soon?

The frustrations and realities of re-imaging orders that come back around quickly.

I’m fond of PET scans. Not just because of the modality’s intrinsic merits; for whatever reason, it seems that most rads in our field find PET beyond their skillset and/or not worthwhile to learn. Being an exception to that rule makes one something of a wizard. I’d be entirely content to log on any given morning and see my worklist chock-full of them.

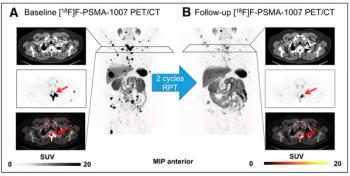

Only a couple came my way this past week, and one of them stumbled out of the starting-gate: I saw that the same patient had been PET-ed only about a month before. No diagnosed malignancy, just “follow-up” for an active sub-centimeter lung nodule.

Cynical thoughts flew through my mind: How did this re-scan get authorized, so soon after the prior? Who thought this was a good use of resources, not to mention radiation dose to the patient? No way was it getting reimbursed. As the two studies’ data percolated through the software we use for PET, I shot an instant message to a colleague about the matter, and we groused together for a bit.

The studies finished loading, and the nodule was the same as it had been…but, hush my mouth, there were now a couple of enlarged/active lymph nodes in the mediastinum. Quite a change, given the short interval. I went from disapproving to mystified: Did the referrer somehow know that a rapidly progressing cancer was present? Or had s/he just blundered into identifying significant new pathology earlier than others would have?

As many readers can probably attest, most of the time we receive a “too soon” follow-up exam, it does, indeed, turn out to be too soon for the study to be useful. My most frequent example is pulmonary nodules on regular ol’ CT. A prior study spelled out that the next scan should be in six months, perhaps, or even three…and here we are with a one-month follow-up. Congratulations, the lesion is stable, but it’s too soon for that to mean anything. What now, O smarter-than-Fleischner referrer?


The most contentious I ever saw this get was in the hospital where I did my fellowship. Some clinicians there thought nothing of getting daily follow-up CTs for pancreatitis—on a couple of occasions more than once per day. Or machine-gun CTAs: “Is there a pulmonary embolism?” “No.” “Okay, the patient coughed again. How about now?” This sufficiently aggravated the rads that we almost saw action from leadership. Almost.

Being the rad sounding the “too soon” alarm isn’t easy. It’s far from a unique circumstance, so you always risk sounding like a broken record. It also can make you look whiny, like a kid who doesn’t want to do his chores…from the perspective of the referrers who are convinced they “need” study X done, or from members of your own team who think they’re telling you something new with statements like “This is a service industry,” and “We have to give the referrers what they want.”

Indeed, without team members backing you up, any “too soon” protestations you make are wasted breath. Most rads learn this sooner rather than later and wind up just muttering under their breath as they read the meaninglessly stable excess imaging. Or griping to colleagues, as noted above.

Over the years, I’ve heard of patient radiation-dosage tracking initiatives that one might hope would have some sort of impact on this. Clinicians trying to order a patient’s sixth triphasic-contrast CT in a year might, theoretically, encounter some automated pushback. Maybe even get flagged for a talking-to. Somehow, that doesn’t seem to be happening.

I think the strongest antidote for too-soon re-imaging is, like it or not, financial. Pure-capitalist society, 100-percent socialized medicine, or anywhere in between, the equipment, contrast, electricity, and manpower are being paid for somehow. Resources are limited; hence, overuse has consequences.

In other words, whoever’s doing extra work or allocating excess resources but isn’t getting paid for it is going to notice. Human nature being what it is, I suspect they’ll react more strongly to such circumstances than they did when they heard that patients were getting irradiated too much.

So maybe the government or insurance companies get more aggressive/creative in finding new excuses to deny authorization for imaging, or retroactively de-authorize studies that have already been done. Whoever’s most directly impacted (collecting the billing) is going to be most likely to seek changes to the situation.

Diagnosing the problem doesn’t seem too hard: The people ordering excess imaging don’t face negative consequences for doing so. About the worst thing they have to deal with is the bureaucratic hassle of getting something approved—and since they can delegate that hassle to others (office-staff), it’s not even a speed-bump to the referrer.

Once their order gets past the authorization-goalie, they never have to worry about it again. At that point, either the hospital or the rads themselves have the joy of trying to collect for the diagnostic work. If the study doesn’t get reimbursed, that’s red ink on the ledger for others, never the referrer.

It seems to me that closing this loop and providing real negative feedback would be the most reliable way of bringing about change. Some sort of referrer-QA system, perhaps, in which repeat offenders could see they were racking up bad stats…enough of which might result in corrective action.

Or maybe just impose a “you partied, you pay” rule: Referrers ordering “too-soon” follow-ups or other unwarranted imaging that doesn’t get reimbursed wind up seeing the negative financial impact on their own books, rather than on the books of the rads who compliantly did the work that got put in front of them.

By all means, there could be an appeals process so the referrers had a chance to explain why they thought their orders were justified…after all, as evidenced by my PET, sometimes it isn’t too soon.

Follow Editorial Board member Eric Postal, M.D., on Twitter,
